A Brief History of Work Time [Comic]

Caught up on all your work? Check out the material below if you want to have some fun: OG Toggl Comics, infographics, and more.

Here are our top articles, starting from the basics.

The only difference between programming and games is that...

Some of these remote workspaces–such as the afterlife–might not...

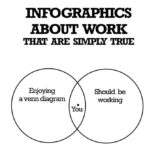

These infographics about work present such important observations as...

A couple of years ago I had what looked...